The Role of Cybersecurity AI Assistants: An In-Depth Analysis of Gartner's 2024 Hype Cycle

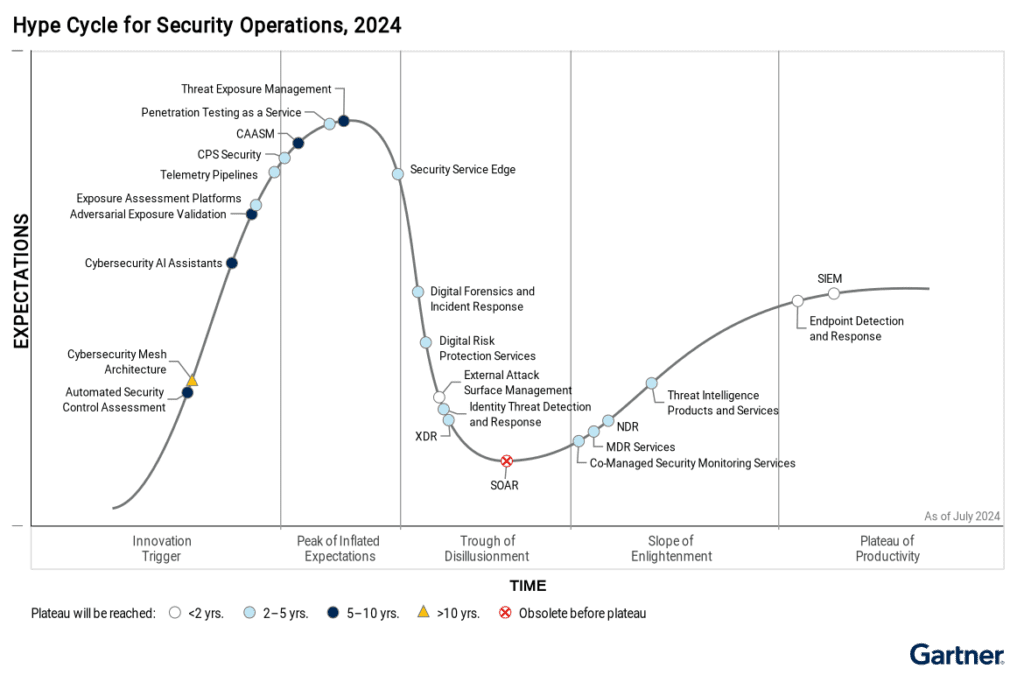

Cybersecurity AI assistants are on the brink of transforming the way organizations handle their security needs, yet their adoption remains slow, with less than 1% market penetration. In Gartner’s 2024 Hype Cycle, analysts Jeremy D’Hoinne, Avivah Litan, Wilco van Ginkel, and Mark Horvath deliver a comprehensive view of this emerging technology, offering insights into its potential, obstacles, and future trajectory.

However, while Gartner addresses several crucial aspects of these AI assistants, key areas are overlooked, particularly concerning the ethical risks, adversarial threats, and governance frameworks that are vital for building trust and ensuring successful deployment.

This article takes Gartner’s analysis as a foundation, expands on the critical gaps they missed, and proposes a more holistic view of cybersecurity AI assistants.

Cybersecurity AI Assistants: What They Are and Why They Matter

Cybersecurity assistants leverage large language models (LLMs) to assist security teams by discovering existing knowledge from security tools and generating content relevant to specific roles. They help streamline various tasks such as incident response, code review, risk management, and threat intelligence synthesis. By integrating with existing cybersecurity platforms or acting as dedicated agents, these AI assistants promise to enhance productivity and reduce the strain on already overwhelmed security teams.

Gartner emphasizes the role of these assistants in addressing the global shortage of cybersecurity talent, where automation becomes essential for managing increasingly complex and sophisticated threats.

Use Cases for Cybersecurity AI Assistants

Gartner correctly identifies several high-value use cases for these AI-driven tools, including:

- Incident response: Automating initial investigation steps and generating remediation suggestions.

- Threat intelligence: Synthesizing large volumes of threat data to aid in quicker decision-making.

- Code review: Supporting application security teams in reviewing code for vulnerabilities.

- Security operations: Automating detection, analysis, and response tasks in security operations centers (SOCs).

These use cases are especially relevant for organizations struggling with limited resources or those dealing with increasingly sophisticated threats. However, the pace of adoption will likely vary based on organizational maturity and the nature of the tasks being automated.

Key Insights from Gartner

Skill Augmentation, Not Replacement: Gartner astutely notes that AI assistants should be viewed as tools to augment, not replace, skilled cybersecurity professionals. Their ability to automate routine tasks allows experts to focus on more complex and critical issues, enhancing both productivity and response times.

Hallucinations and Trust Issues: A significant concern addressed by Gartner is the risk of AI hallucinations, where generative AI provides inaccurate or misleading information. In cybersecurity, even minor errors could have catastrophic consequences, making organizations cautious about adopting AI assistants for mission-critical tasks. This is a valid and important observation, as establishing trust in AI systems is fundamental to their successful integration.

Incremental Adoption and Caution: Gartner advises organizations to experiment cautiously with AI assistants, emphasizing that initial implementations may have a higher error rate. This recommendation reflects a sound understanding of how AI technologies evolve and mature, and how errors in the early stages could erode confidence in the technology.

Gaps in Gartner’s Analysis

While Gartner’s report offers valuable insights, several critical gaps could hinder a broader understanding of the real challenges and opportunities surrounding AI assistants in cybersecurity.

1. Ethical and Legal Considerations: The Missing Dimension

Gartner only briefly touches on the ethical and legal implications of cybersecurity AI assistants. Issues such as AI bias, decision transparency, and privacy are vital, especially as AI systems are increasingly used in regulated industries. Without proper governance around how AI handles sensitive data or generates critical security recommendations, organizations may face significant legal and compliance risks.

Moreover, responsible AI use—ensuring fairness, accountability, and transparency—is becoming a key concern for businesses adopting AI. For cybersecurity, where AI might recommend or take automated action, clear ethical guidelines are necessary to prevent unintended harm.

2. Adversarial AI: A Growing Threat

Another significant gap in Gartner’s analysis is the absence of any discussion on adversarial AI attacks. As AI becomes more prevalent in cybersecurity, hackers could exploit vulnerabilities within AI systems themselves, launching adversarial attacks to manipulate AI outputs. Techniques such as data poisoning or model evasion could lead AI systems to make dangerous decisions, such as ignoring real threats or misconfiguring security tools.

For organizations relying on AI for critical cybersecurity tasks, adversarial attacks pose a serious risk that could undermine the very purpose of adopting AI-driven solutions.

3. Human-AI Collaboration: Fostering Trust and Adoption

Gartner emphasizes that AI assistants are meant to augment human teams, but the report does not explore the nuances of human-AI collaboration in detail. How security professionals interact with AI assistants, including whether they trust AI-generated recommendations and how they review and validate AI outputs, is an essential factor in successful adoption.

Building trust between humans and AI requires mechanisms to ensure transparency in AI decision-making and the ability for humans to override AI actions when necessary. This dynamic interaction is critical for the successful integration of AI assistants into security operations.

4. Governance and Auditability: Ensuring Accountability

Another major oversight in Gartner’s report is the lack of discussion around the need for AI governance and auditability. As AI becomes more embedded in security processes, organizations need robust frameworks to monitor and audit AI decisions. Ensuring accountability, tracking AI-driven actions, and maintaining a documented decision trail will be critical, especially for regulated industries where compliance is paramount.

By implementing governance frameworks, organizations can mitigate risks, ensure transparency, and maintain trust in AI systems. The absence of such frameworks could lead to security gaps, compliance issues, and mistrust from internal stakeholders.
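One way to picture a "documented decision trail" is an append-only log in which every entry commits to the one before it, so later tampering becomes detectable. The sketch below is illustrative only: the `AuditLog` class, its field names, and the example actors are assumptions made for this article, not part of Gartner's report or any specific product.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """Hash an entry body deterministically (keys sorted before hashing)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class AuditLog:
    """Hypothetical append-only log of AI-driven and human actions."""
    entries: list = field(default_factory=list)

    def record(self, actor: str, action: str, rationale: str) -> dict:
        # Each entry stores the hash of the previous entry, forming a chain.
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        body = {"actor": actor, "action": action,
                "rationale": rationale, "prev_hash": prev_hash}
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        # Recompute every hash and check that the chain links are unbroken.
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.record("ai-assistant", "recommend_isolate_host", "beaconing pattern in logs")
log.record("analyst", "approve_isolation", "confirmed against EDR telemetry")
print(log.verify())
```

A production audit trail would also need timestamps, access controls, and storage outside the system being audited. The hash chain here only shows how tampering with a recorded decision can be made evident.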

5. Cost Considerations: Hidden Expenses of AI Implementation

Gartner briefly mentions uncertainty around pricing but does not fully explore the hidden costs of implementing AI assistants. Beyond licensing fees, there are additional costs related to infrastructure upgrades, AI model maintenance, and training personnel to effectively use AI tools.

Organizations may also face unexpected costs when AI-generated errors lead to operational disruptions or security misconfigurations. Understanding the total cost of ownership is essential for organizations considering AI deployment.

The Importance of Addressing These Gaps

These missing elements are not just theoretical concerns but practical obstacles to the wider adoption of AI assistants in cybersecurity. Failing to address ethical risks, adversarial attacks, and governance structures could lead to slow adoption, or worse, significant security breaches caused by AI misconfigurations or attacks on AI systems.

Furthermore, fostering human-AI collaboration and implementing a clear AI governance framework will be critical to building trust in AI systems, ensuring that organizations can leverage these tools effectively and responsibly.

Trust and Oversight in AI Systems

AI-powered cybersecurity systems bring impressive capabilities, but they are not infallible. One of the core challenges is trust, especially as AI systems may experience “hallucinations,” where they produce inaccurate or misleading information. This can compromise threat detection accuracy, leading to false positives or even overlooking critical threats. Additionally, adversarial AI presents a new challenge, where attackers manipulate inputs to trick AI models into making incorrect predictions, exposing vulnerabilities in the system.

To mitigate these risks, human oversight and regular security audits are essential. Organizations should continuously evaluate and refine their AI models to detect and prevent adversarial AI attacks. Moreover, deploying adversarial testing can help uncover potential weaknesses, allowing cybersecurity teams to strengthen their defenses against emerging threats. Trust in AI systems can be further improved through transparency, where AI models explain their decision-making processes, making them more accountable and understandable to human operators.
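To make adversarial testing concrete, the following minimal sketch runs known-malicious samples through a detector, applies simple perturbations, and reports which perturbations slip past. The `toy_detector` is a deliberately naive keyword matcher standing in for a real model, and the phrases, perturbation names, and sample messages are all hypothetical.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical phrases for the toy detector; a real system would use a trained model.
SUSPICIOUS_TERMS = ("password", "verify your account", "wire transfer")


def toy_detector(message: str) -> bool:
    """Flag a message if it contains any known suspicious phrase."""
    text = message.lower()
    return any(term in text for term in SUSPICIOUS_TERMS)


def swap_lookalikes(message: str) -> str:
    """Replace letters with visually similar characters."""
    return message.replace("o", "0").replace("a", "@")


def insert_zero_width(message: str) -> str:
    """Put a zero-width space between every pair of characters."""
    return "\u200b".join(message)


PERTURBATIONS: Dict[str, Callable[[str], str]] = {
    "lookalikes": swap_lookalikes,
    "zero_width": insert_zero_width,
    "uppercase": str.upper,
}


def find_evasions(detector: Callable[[str], bool],
                  malicious_samples: List[str],
                  perturbations: Dict[str, Callable[[str], str]]) -> List[Tuple[str, str]]:
    """Return (sample, perturbation name) pairs that the detector misses."""
    evasions = []
    for sample in malicious_samples:
        if not detector(sample):
            continue  # only perturb samples the detector catches to begin with
        for name, perturb in perturbations.items():
            if not detector(perturb(sample)):
                evasions.append((sample, name))
    return evasions


samples = ["Please verify your account now", "Send the wire transfer today"]
for sample, name in find_evasions(toy_detector, samples, PERTURBATIONS):
    print(f"evaded by {name}: {sample!r}")
```

Each reported pair is a weakness to fix, for example by normalizing input before matching, and then to retest. The same harness shape applies when the detector is a machine learning model, although realistic perturbations for such models are more involved than these string edits.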

Insights on AI-powered Cyberattacks:

- AI-driven Social Engineering: AI tools are being used to automate research and execution in social engineering attacks, identifying vulnerable individuals within an organization and crafting highly personalized attacks. Attackers can create deepfake videos or audio mimicking executives to manipulate employees into revealing sensitive information or transferring funds. These attacks have become more sophisticated, with AI-generated multimedia often being indistinguishable from reality.

- AI-enabled Phishing: AI helps cybercriminals produce hyper-realistic phishing attempts via email, SMS, or social media. AI chatbots can simulate real-time conversations to extract sensitive information from victims. This poses a unique threat to industries heavily reliant on customer service.

- Adversarial AI: Cybercriminals use adversarial AI techniques like poisoning or evasion attacks to compromise machine learning models. These involve feeding the AI with manipulated data, leading to inaccurate predictions or decisions. For example, attackers can corrupt an AI-driven security system to bypass defenses.

- AI-enhanced Ransomware: Ransomware is evolving through the use of AI to identify vulnerabilities, encrypt data faster, and evade detection tools. It can dynamically adapt to its environment, making traditional cybersecurity defenses less effective.

Mitigation Strategies for AI-powered Cyberattacks:

- Continuous Monitoring & User Behavior Analytics: Establishing baselines for system activities and using tools like User and Entity Behavior Analytics (UEBA) can help detect AI-driven anomalies. This approach should integrate with endpoint protection and cloud monitoring.

- Advanced AI Defense Systems: Utilizing AI in cybersecurity systems themselves can aid in detecting AI-powered threats, enabling faster response times. Automated defenses can identify and neutralize adversarial AI attacks before they cause damage.

- Regular Security Audits: AI attacks evolve quickly, so continuous security assessments and proactive testing of AI models are crucial to maintaining robust defenses.
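As an illustration of the baselining idea behind UEBA mentioned in the list above, the sketch below builds a per-user baseline from historical activity counts and flags observations that deviate sharply from it. It is a simplified assumption of how such a check might look: the function names, the z-score rule, the threshold of 3.0, and the sample data are hypothetical and not drawn from any UEBA product.

```python
from statistics import mean, stdev
from typing import Dict, List, Tuple


def build_baseline(history: Dict[str, List[float]]) -> Dict[str, Tuple[float, float]]:
    """Compute (mean, sample standard deviation) per user from past activity counts."""
    return {user: (mean(values), stdev(values))
            for user, values in history.items()
            if len(values) >= 2}  # stdev needs at least two data points


def is_anomalous(baseline: Dict[str, Tuple[float, float]],
                 user: str, observed: float, z_threshold: float = 3.0) -> bool:
    """Flag an observation whose z-score against the user's baseline exceeds the threshold."""
    if user not in baseline:
        return True  # no baseline yet: route to human review rather than assume normal
    mu, sigma = baseline[user]
    if sigma == 0:
        return observed != mu  # perfectly constant history: any change is a deviation
    return abs(observed - mu) / sigma > z_threshold


# Hypothetical data: daily counts of file downloads per user.
history = {"alice": [10, 12, 11, 9, 10, 11, 12]}
baseline = build_baseline(history)
print(is_anomalous(baseline, "alice", 11))
print(is_anomalous(baseline, "alice", 60))
```

Real deployments typically model many signals at once (time of day, location, resources accessed) and account for seasonality. A single z-score is only the simplest instance of comparing behavior against an established baseline.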

Cyber Strategy Institute’s Approach: Securing AI Assistants

At the Cyber Strategy Institute, we view AI assistants as both an opportunity and a challenge for the cybersecurity industry. Our approach emphasizes the need for security at every layer, including the AI systems themselves. By focusing on secure AI development, adversarial defense, and ethical AI use, we ensure that organizations can deploy AI-driven solutions confidently and securely.

We also stress the importance of human oversight, combining AI’s computational power with human intuition and judgment to achieve optimal results. In line with our commitment to zero-trust security, we advocate for treating AI-generated recommendations as “draft only” until thoroughly reviewed and tested by human experts.
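The "draft only" principle can be sketched as a simple gate: an AI-generated recommendation starts in a draft state and cannot be executed until a named human reviewer approves it. The classes and functions below (`Recommendation`, `review`, `execute`) are hypothetical names chosen for illustration and do not describe any particular tool.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    DRAFT = "draft"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Recommendation:
    """An AI-generated suggestion; it is a draft until a human reviews it."""
    summary: str
    proposed_action: str
    status: Status = Status.DRAFT
    reviewer: Optional[str] = None


def review(rec: Recommendation, reviewer: str, approve: bool) -> Recommendation:
    """Record a human decision; an empty reviewer name is not accepted."""
    if not reviewer:
        raise ValueError("a named human reviewer is required")
    rec.status = Status.APPROVED if approve else Status.REJECTED
    rec.reviewer = reviewer
    return rec


def execute(rec: Recommendation) -> str:
    """Refuse to act on anything that has not been explicitly approved."""
    if rec.status is not Status.APPROVED:
        raise PermissionError(f"recommendation is {rec.status.value}, not approved")
    return f"executed: {rec.proposed_action} (approved by {rec.reviewer})"


# Hypothetical recommendation; 203.0.113.7 is a documentation-range IP address.
rec = Recommendation("Repeated failed logins from one address", "block_ip 203.0.113.7")
try:
    execute(rec)
except PermissionError as err:
    print(f"blocked: {err}")

review(rec, reviewer="soc-analyst-1", approve=True)
print(execute(rec))
```

The design choice worth noting is that the default state is the restrictive one: nothing runs unless a person has taken an explicit, attributable action.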

Conclusion: A Holistic View of Cybersecurity AI Assistants

While Gartner’s analysis provides a solid foundation for understanding the potential of cybersecurity AI assistants, their report misses key areas that are vital for ensuring safe, ethical, and responsible AI adoption. Issues around ethical considerations, adversarial attacks, and governance must be addressed to build trust and ensure that AI assistants can truly enhance cybersecurity without introducing new risks.

By integrating these considerations, organizations can approach AI adoption with a clearer understanding of both the opportunities and challenges, ensuring that their AI-driven solutions are secure, reliable, and compliant.

Top 11 Questions on AI Assistants in Cybersecurity:

1. How is AI transforming cybersecurity?

Answer: AI significantly enhances cybersecurity by automating threat detection, recognizing patterns in large datasets, and detecting zero-day vulnerabilities. AI tools help security teams respond faster to incidents and reduce false positives.

2. How do AI assistants improve threat detection and response?

Answer: In addition to automating repetitive tasks like alert triage and log analysis, AI assistants can detect sophisticated AI-driven threats such as adversarial AI attacks, AI-enhanced ransomware, and AI-generated phishing schemes. With the ability to monitor patterns and anomalies, these tools help organizations identify and mitigate advanced threats in real time.

3. Can AI assistants prevent AI-powered cyberattacks?

Answer: Yes, AI assistants can detect and respond to AI-powered cyberattacks, such as deepfake social engineering or adversarial AI. They do this by continuously monitoring behavior, identifying deviations from established baselines, and deploying advanced machine learning models to counter adversarial attempts. This capability makes them critical in defending against increasingly sophisticated AI-driven threats.

4. What are the limitations of AI in cybersecurity?

Answer: While AI can enhance threat detection and response, it also has limitations. AI systems can be vulnerable to adversarial attacks, where malicious actors manipulate the input data to cause incorrect decisions. Moreover, AI is prone to “hallucinations”—errors in judgment or prediction. Continuous human oversight and testing are required to ensure AI-generated suggestions are accurate and secure, especially in the face of AI-enhanced attacks.

5. How do AI-powered phishing attacks work, and can AI assistants defend against them?

Answer: AI-powered phishing attacks use machine learning to craft hyper-realistic emails or messages tailored to specific individuals, often mimicking executives or colleagues with alarming accuracy. AI assistants defend against these threats by analyzing communication patterns, detecting anomalies, and flagging suspicious messages in real-time. Some systems may even block phishing attempts or automate responses based on historical data.

6. Are cybersecurity AI assistants vulnerable to adversarial AI attacks?

Answer: Yes, like any AI system, cybersecurity AI assistants are susceptible to adversarial AI attacks, where malicious actors feed manipulated data to trick the system into making incorrect decisions. However, advanced defensive AI models are being developed to detect and mitigate these threats. Incorporating regular security audits and adversarial testing can minimize this risk.

7. What are some real-world applications of cybersecurity AI assistants?

Answer: Beyond assisting with incident response and threat hunting, AI assistants can now play a significant role in defending against AI-enhanced ransomware attacks, automating code and script generation for secure development, and fixing cloud misconfigurations. They also help identify critical security events across logging systems, further enhancing the speed of response to real-time threats.

8. What are the main challenges in adopting AI assistants for cybersecurity?

Answer: One significant challenge is the possibility of AI hallucinations, where systems produce inaccurate information. Additionally, AI-driven attacks, such as adversarial attacks, pose a unique threat to cybersecurity AI assistants. Organizations must ensure that the AI systems they adopt have strong defenses against such vulnerabilities. Trust and accurate decision-making also remain critical obstacles to widespread adoption.

9. How can AI assistants help improve security in cloud environments?

Answer: AI assistants can automate cloud security tasks such as fixing misconfigurations and identifying vulnerabilities. They can also mitigate AI-driven cloud attacks by monitoring real-time data and identifying abnormal patterns indicative of AI-enhanced ransomware or advanced persistent threats (APTs). These capabilities ensure faster and more effective cloud security management.

10. How do cybersecurity AI assistants manage false positives?

Answer: Managing false positives remains a challenge for AI systems. However, AI assistants are improving by using machine learning algorithms that continuously refine and learn from previous incidents. AI-driven threat detection systems are now capable of adjusting their thresholds for alerts based on evolving threat landscapes, reducing the risk of false positives while still maintaining high sensitivity to emerging AI-enhanced threats.

11. How do cybersecurity AI assistants handle emerging threats, such as AI-driven attacks?

Answer: AI assistants are specifically equipped to handle emerging AI-driven threats by utilizing predictive analytics and anomaly detection techniques. These assistants can spot AI-generated phishing attempts, identify deepfake social engineering attacks, and neutralize AI-enhanced ransomware before it spreads. Advanced machine learning models allow them to evolve alongside these threats, ensuring timely detection and mitigation.