🌎 Future is Here

Secure My "AI"

We focus on securing the AI itself, including its code, interactions, learning, and infrastructure, against malicious threats such as malware and ransomware.

- Securing the AI's Code

- Safeguarding the AI from Malicious Attacks

- Protect Your AI's Data

- Common Ground

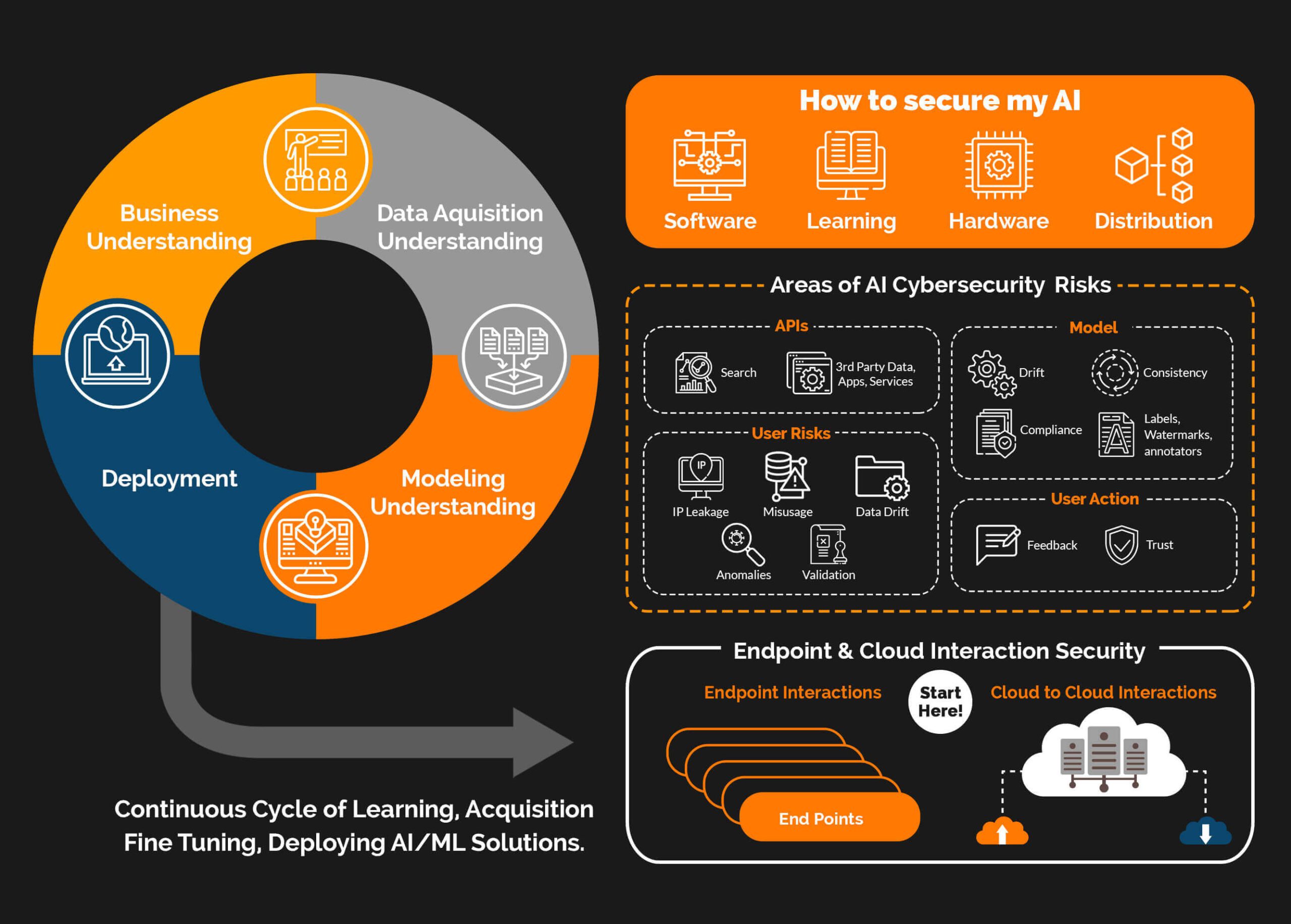

So How Do I Secure My AI?

It is complex, but to simplify things, you need to look at four main areas. Your AI systems probably know more about your business than any single tool ever has, and covering these four areas helps make them the most secure systems you have.

Software

To keep your AI software secure, it is imperative to perform thorough code analysis, check for programming vulnerabilities, and carry out security audits on a regular basis.

Learning

Vulnerabilities at the learning level are unique to AI and require specific protective measures. It is crucial to safeguard the databases that hold training data and to carefully control which data is allowed in. Monitoring the model for abnormal performance is also essential.
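As a rough illustration of the two controls described here, the sketch below gates incoming records before they reach training and flags a model score that drifts far from its baseline. The function names, the feature ranges, and the three-standard-deviation threshold are all assumptions chosen for the example, not a description of any particular product.

```python
from statistics import mean, stdev


def is_valid_record(record, allowed_ranges):
    """Return True only if every expected feature is present, numeric, and in range.

    `allowed_ranges` is a hypothetical mapping of feature name -> (low, high).
    """
    for name, (low, high) in allowed_ranges.items():
        value = record.get(name)
        # Reject missing values, non-numeric values, and booleans posing as numbers.
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            return False
        # NaN fails this comparison as well, so it is rejected too.
        if not low <= value <= high:
            return False
    return True


def is_abnormal(baseline_scores, new_score, threshold=3.0):
    """Flag a score more than `threshold` standard deviations from the baseline mean."""
    mu = mean(baseline_scores)
    sigma = stdev(baseline_scores)  # needs at least two baseline scores
    if sigma == 0:
        return new_score != mu
    return abs(new_score - mu) / sigma > threshold
```

In practice the ranges would come from a reviewed data schema and the baseline from recent evaluation runs; a flagged score is a prompt for investigation, not proof of an attack.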

Hardware / Devices

Without the right hardware, an AI model's performance will falter. Ensuring that hardware and devices are aligned with a zero trust architecture and framework is crucial for security.

Distribution

For the AI model to function smoothly, each component must perform its designated task effectively, and the results must be integrated reliably so that the final decisions are accurate.

- AI Threats

Understanding AI Threats

Malware & Ransomware

AI systems are vulnerable to malware that can infiltrate them and either steal data or hold it hostage, resulting in substantial financial loss for both individuals and businesses.

Data Breaches

Hackers can illegally access an AI system and steal personal information or valuable business secrets. This can lead to serious consequences such as identity theft, financial fraud, and other harmful outcomes.

Adversarial Attacks

Adversarial attacks manipulate AI systems by introducing false data or images that deceive the system into making wrong decisions. These attacks can bypass security measures and allow access to sensitive information.

Insider Threats

Insiders, such as employees or contractors, can purposefully or accidentally cause security breaches within an AI system. This kind of attack is particularly dangerous because insiders have detailed knowledge of the system and its vulnerabilities.

Denial of Service

AI systems can be vulnerable to attackers who can purposely flood the system with excessive traffic, ultimately leading to crashes or unavailability. Such malicious actions can have detrimental effects on business operations, resulting in significant financial losses.
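One common way to blunt request floods is to rate-limit each client before its traffic reaches the model. The sketch below is a minimal token bucket under stated assumptions: the `TokenBucket` class, its parameters, and the injectable `clock` are hypothetical names for illustration, and a real deployment would typically enforce limits at a gateway or load balancer as well.

```python
import time


class TokenBucket:
    """Allow short bursts up to `capacity`, then a steady `rate` of requests per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate          # tokens added per second
        self.capacity = capacity  # maximum burst size
        self.clock = clock        # injectable so the limiter can be tested
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self):
        """Return True if the request may proceed, False if it should be rejected."""
        now = self.clock()
        elapsed = now - self.last
        self.last = now
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

Keeping one bucket per client or API key means a single noisy source runs out of tokens without affecting everyone else.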

Physical

It is possible for hackers to gain direct access to an AI system, thereby compromising its hardware and software components. Detecting such physical attacks can be quite challenging, and the consequences can be incredibly detrimental, inflicting substantial harm on the system.

Social Engineering

Attackers use social engineering tactics such as phishing emails or phone calls to deceive individuals into disclosing their login credentials or other confidential data. These tactics are commonly used to breach AI systems and unlawfully acquire valuable information.

IoT Threats

Connected AI systems can be susceptible to security threats arising from other IoT devices. Such attacks have the potential to infiltrate AI systems, leading to data breaches or even system malfunctions.

- Services

Our Comprehensive AI Security Solutions

Device & File Security

Securing your devices and the files that run your AI against malware and ransomware is essential. That is what Warden is designed to do from the ground up: it auto-contains known-bad and “unknown” files so that they cannot impact your system.

Software Code Review

With over 50% of all open-source AI code having vulnerabilities, it is important to review your AI's code. We can rapidly analyze your code to find issues in multiple areas such as compliance, exposure, or threats.

Verifying Your Distribution

Delivering your AI solution, whether internally or externally, takes complex infrastructure, and making it seamless for users while keeping it secure is a challenge. This is where pentesting your solution becomes important: it continuously uncovers the risks that arise as you maintain and upgrade your systems.

Guiding Your Holistic Approach

Using the insights we gain from protecting your devices and from understanding your AI software and the distribution system supporting it, we can give you a complete, prioritized view of your risks. This helps you align resources, capital, and effort toward the fixes and upgrades that resolve those risks.

Let's talk through your requirements!

Book a call with us today to talk through your challenges and risks to see how we can help.