Secure Your Autonomous AI Agents: Top FAQs for AI Agentic Frameworks

AI SAFE²: A Next-Generation Framework for Secure AI Agent Automation

(Sanitize & Isolate · Engage & Monitor · Audit & Inventory · Fail-Safe & Recovery · Evolve & Educate)

Sanitize & Isolate makes data hygiene the first priority, keeping secrets and identifiers out of AI contexts while enforcing least-privilege access.

Fail-Safe & Recovery adds automated playbooks and “kill switches” missing from many frameworks.

Engage & Monitor delivers continuous, behavior-driven oversight, detecting ghost tokens or poisoned embeddings in real time.

Evolve & Educate transforms security from a one-time project into a living program that adapts as AI threats evolve.

Introduction – AI Agent Framework

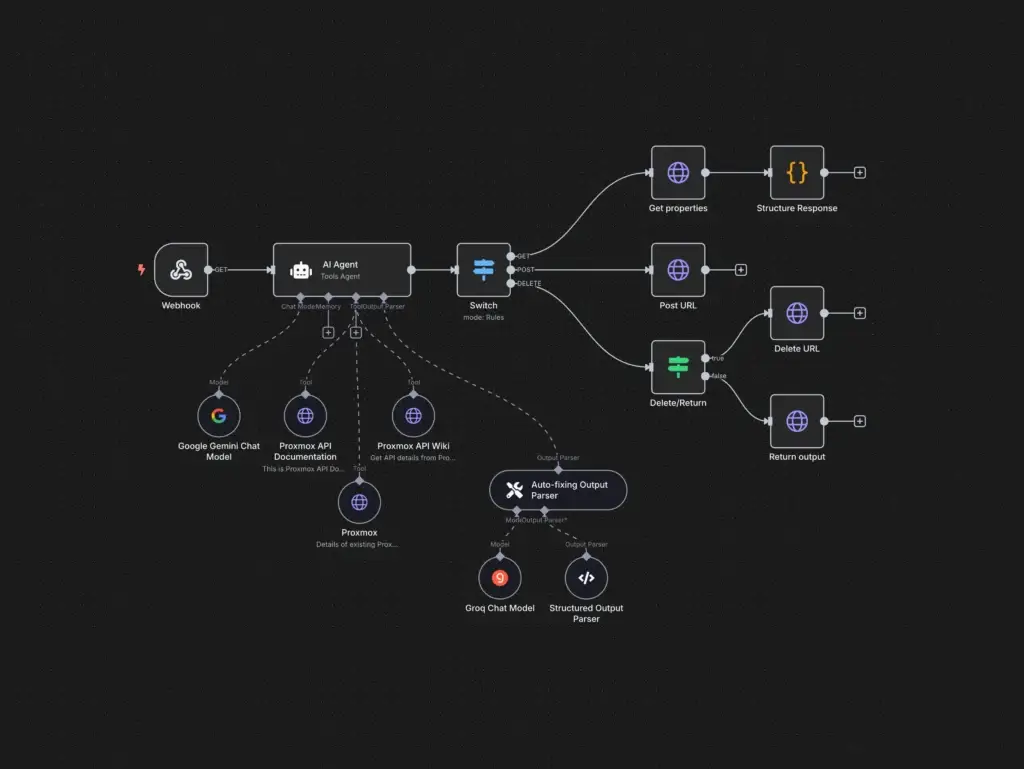

As no-code automation platforms (n8n, Make.com, Zapier) and multi-agent AI orchestration tools (AgenticFlow, Curser) pour into marketing stacks promising massive productivity gains, credential sprawl and hidden data leaks have become an existential risk. Existing frameworks like GitGuardian’s five controls (Audit, Centralize, Prevent, Improve, Restrict) lay a solid foundation but don’t fully address the chain-reaction threats inherent in agent-to-agent (A2A) or RAG-powered workflows.

AI SAFE² builds on and evolves those concepts, introducing Sanitize & Isolate and Engage & Monitor as first-class pillars—turning a static checklist into a living strategy that:

Proactively filters secrets and PII before they enter any agent or webhook

Continuously watches for abnormal behavior in chained automations

Automates recovery when compromise is detected

Embeds ongoing learning and red-teaming into your organization

Below we introduce each pillar, explain how AI SAFE² outperforms legacy models, and provide an integrated “AI Security Checklist for Automation Development” you can apply today.

Why AI SAFE² Is Different – AI Agentic Framework

Data-Centric Sanitization:

Instead of only scanning for leaked keys, AI SAFE² mandates proactive input/output filtering—masking credential patterns, removing identifiers, and reducing data granularity (e.g., rounding coordinates or stripping metadata) at every agent boundary.
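
As a rough illustration of boundary filtering, the sketch below drops identifier fields, masks credential-looking strings, and rounds coordinates before a record is handed to an agent. The field names (`name`, `email`, `lat`, `lon`, and so on) and the regex are assumptions made for this example, not part of AI SAFE² itself.

```python
import re
from typing import Any

# Hypothetical field names and pattern, chosen only for illustration.
IDENTIFIER_FIELDS = {"name", "email", "session_id", "device_id"}
SECRET_PATTERN = re.compile(r"(?i)\b(api[_-]?key|token|secret)\b\s*[:=]\s*\S+")


def reduce_granularity(lat: float, lon: float, places: int = 1) -> tuple[float, float]:
    """Round coordinates; one decimal place is roughly 11 km of latitude."""
    return round(lat, places), round(lon, places)


def sanitize_record(record: dict[str, Any]) -> dict[str, Any]:
    """Return a copy that is safer to pass across an agent boundary."""
    clean: dict[str, Any] = {}
    for key, value in record.items():
        if key in IDENTIFIER_FIELDS:
            continue  # drop direct identifiers entirely
        if isinstance(value, str):
            # keep the key name, mask only the value that follows it
            value = SECRET_PATTERN.sub(r"\1=[REDACTED]", value)
        clean[key] = value
    if "lat" in clean and "lon" in clean:
        clean["lat"], clean["lon"] = reduce_granularity(clean["lat"], clean["lon"])
    return clean
```

A real deployment would likely need a broader pattern set and a reviewed list of identifier fields per data source.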

Scoped, Ephemeral Access:

While traditional “least privilege” might grant a Zapier token broad “read/write” to a workspace, AI SAFE² prescribes scoped OAuth tokens (e.g., “post-only to #marketing”) and short-lived cloud roles (AWS STS, Azure AD) to limit blast radius.
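
To make the idea of scoped, short-lived access concrete, here is a minimal in-memory sketch. `ScopedToken`, `issue_token`, `is_allowed`, and the scope strings are hypothetical names for illustration; a real system would rely on the tokens issued by its OAuth provider or cloud STS rather than on code like this.

```python
import secrets
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class ScopedToken:
    """Hypothetical in-memory token used only to illustrate scope plus expiry."""
    scopes: frozenset[str]
    expires_at: float
    value: str = field(default_factory=lambda: secrets.token_urlsafe(32))


def issue_token(scopes: set[str], ttl_seconds: int = 900,
                now: Optional[float] = None) -> ScopedToken:
    """Issue a token limited to the given scopes and lifetime (default 15 minutes)."""
    issued = time.time() if now is None else now
    return ScopedToken(scopes=frozenset(scopes), expires_at=issued + ttl_seconds)


def is_allowed(token: ScopedToken, action: str, now: Optional[float] = None) -> bool:
    """Allow an action only if the token is unexpired and carries that exact scope."""
    current = time.time() if now is None else now
    return current < token.expires_at and action in token.scopes
```

The `now` parameter exists only so the expiry check can be exercised deterministically in tests.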

Automated Recovery Playbooks:

Beyond prevention, AI SAFE² embeds automated rotation bots and pipeline circuit breakers that quarantine suspect workflows—ensuring a swift return to baseline even amid an active breach.

Runtime Behavioral Analytics:

Instead of periodic audits alone, AI SAFE²’s Engage & Monitor pillar offers real-time anomaly detection—spotting unusual API call spikes, unexpected cross-agent calls, or sudden vector-DB writes indicative of poisoning.
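
One simple way to spot an unusual API call spike is a z-score against a recent baseline. The sketch below assumes you already collect per-agent call counts per time window; the function name and the default threshold of 3 standard deviations are illustrative choices, not values prescribed by the framework.

```python
from statistics import mean, pstdev


def is_spike(baseline: list[int], current: int, threshold: float = 3.0) -> bool:
    """Flag `current` if it is more than `threshold` standard deviations above the baseline mean."""
    if len(baseline) < 2:
        return False  # not enough history to judge
    mu = mean(baseline)
    sigma = pstdev(baseline)
    if sigma == 0:
        return current > mu  # flat baseline: any increase is unusual
    return (current - mu) / sigma > threshold
```

Production systems usually account for seasonality and per-destination baselines as well; this only shows the core comparison.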

Continuous Red-Teaming & Education:

By integrating developer and marketer training with regular adversarial exercises (A2A impersonation, RAG leakage drills, ghost-token scenarios), AI SAFE² keeps teams vigilant to novel attack vectors.

Integrating Agentic AI Sanitization & Risk Reduction

Sanitization During Development

Data Granularity Reduction: Round or bucket sensitive fields (e.g., GPS → city-level), remove user-identifying metadata, and delete all unnecessary data before loading into knowledge bases.

Minimal Data Principle: Ingest only what is strictly needed for the automation’s logic; purge interim data immediately after use.

Identifier Stripping: Remove names, emails, session IDs, or any direct or indirect identifiers that could lead to re-identification or credential inference.

Privacy Compliance: Apply GDPR/CCPA/HIPAA controls—encrypt data at rest/in transit, maintain audit logs of dataset changes, and implement data-processing agreements for vendors.

Checks & Risk Reduction Methods

Regular security audits, penetration tests, and code reviews to uncover misconfigurations or code-level leaks.

Centralized secrets management (e.g., HashiCorp Vault, AWS Secrets Manager) with automated retrieval at runtime and no hard-coded credentials.

Continuous log monitoring for atypical patterns—unexpected API calls, repeated auth failures, or sudden data exfiltrations.

Compliance enforcement (HIPAA, CCPA): apply masking, anonymization, and strict access controls on regulated data.

| GitGuardian Control | AI SAFE² Pillar(s) | Key Enhancements |
|---|---|---|
| Audit | Audit & Inventory | Continuous mapping of agent chains, vector DBs, and webhook ecosystems |
| Centralize | Audit & Inventory + Engage & Monitor | Real-time monitoring and log correlation across platforms |
| Prevent | Sanitize & Isolate + Audit & Inventory | Boundary filtering, schema enforcement, and automated secret masking |
| Improve | Evolve & Educate | Regular red-team A2A/RAG drills, rapid feedback loops, and developer training |
| Restrict | Scope & Restrict (merged into Fail-Safe) | Scoped, ephemeral credentials; just-in-time access |
| (none) | Engage & Monitor | New: Behavioral analytics, anomaly detection, drift monitoring in vector stores |
| (none) | Fail-Safe & Recovery | New: Automated kill-switch bots, credential-rotation workflows, orchestration circuit breakers |

AI SAFE² is strategically broader, operationally deeper, and technically richer—ensuring that agents, automations, and data sources remain under tight control from design through decommission.

The Five Pillars of AI SAFE² – Secure AI Agents

1. Sanitize & Isolate

Goal: Prevent sensitive data and secrets from ever entering an agent’s context.

Data Sanitization

Reduce granularity (e.g., round GPS coords)

Delete unnecessary fields, strip PII (names, IDs)

Use minimal data in training and prompts, in compliance with GDPR/HIPAA/CCPA

Boundary Enforcement

Validate all incoming webhooks against strict JSON schemas

Parameterize queries; forbid embedding plaintext secrets in logs/prompts

Containerize each agent or workflow in isolated namespaces
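
The webhook validation step might look like the following sketch. It is a hand-rolled stand-in for a proper JSON Schema validator, kept to the standard library; the schema contents and the name `LEAD_WEBHOOK_SCHEMA` are assumptions for illustration.

```python
from typing import Any

# Hypothetical schema for one inbound webhook: field name -> required Python type.
LEAD_WEBHOOK_SCHEMA: dict[str, type] = {
    "event": str,
    "campaign_id": str,
    "score": int,
}


def validate_payload(payload: dict[str, Any], schema: dict[str, type]) -> list[str]:
    """Return a list of violations; an empty list means the payload is accepted."""
    errors = []
    # Reject anything the schema does not name, so stray secrets cannot ride along.
    for name in sorted(payload.keys() - schema.keys()):
        errors.append(f"unexpected field: {name}")
    for name, expected in schema.items():
        if name not in payload:
            errors.append(f"missing field: {name}")
        elif type(payload[name]) is not expected:  # strict: bool does not pass as int
            errors.append(f"wrong type for {name}: expected {expected.__name__}")
    return errors
```

Rejecting unknown fields outright is the stricter choice; a more lenient variant would drop them and log the event instead.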

2. Audit & Inventory

Goal: Maintain a live map of every machine identity, vector store, and AI chain.

Automated Discovery

Crawl CI/CD, orchestration tools, GitHub Actions, and self-hosted platforms (n8n)

Identify RAG pipelines and unprotected vector DB instances

Secret-Sprawl Scanning

Leverage GitGuardian-style scans on repos, logs, and documentation

Track credential lifetimes; rotate or revoke any static key older than 30 days
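
The 30-day rule for static keys can be checked mechanically once an inventory exists. The sketch below assumes the inventory is a mapping from key name to creation time; the key names in the usage example are made up.

```python
from datetime import datetime, timedelta, timezone

MAX_STATIC_KEY_AGE = timedelta(days=30)


def find_stale_keys(inventory: dict[str, datetime], now: datetime) -> list[str]:
    """Return the names of keys created more than 30 days before `now`, oldest first."""
    stale = [(created, name) for name, created in inventory.items()
             if now - created > MAX_STATIC_KEY_AGE]
    return [name for _, name in sorted(stale)]


if __name__ == "__main__":
    # Hypothetical inventory entries for illustration.
    inventory = {
        "zapier-slack": datetime(2025, 6, 20, tzinfo=timezone.utc),
        "n8n-crm": datetime(2025, 4, 1, tzinfo=timezone.utc),
    }
    print(find_stale_keys(inventory, datetime(2025, 6, 30, tzinfo=timezone.utc)))
```

The returned list could feed a rotation job or a ticket queue, depending on how much automation the team is comfortable with.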

3. Fail-Safe & Recovery

Goal: Shut down or quarantine compromised automations in seconds.

Automated Incident Playbooks

“Circuit breaker” triggers that pause workflows on anomaly detection

Bot-driven credential rotation and vault updates (HashiCorp Vault, AWS Secrets Manager)

Just-In-Time Access

Dynamic IAM roles or short-lived tokens (<1 hour) instead of long-lived keys

Sandbox testing environments for safe rollback and re-run
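
A circuit breaker of the kind this pillar describes can be sketched as a small state machine. The class below is hypothetical: the policy (pause after a number of consecutive anomalies, stay paused until a manual reset) is one reasonable choice among several, and the anomaly signal itself is assumed to come from your monitoring layer.

```python
from dataclasses import dataclass


@dataclass
class CircuitBreaker:
    """Pauses a workflow after too many consecutive anomalies (hypothetical policy)."""
    max_anomalies: int = 3
    anomalies: int = 0
    is_open: bool = False  # open circuit = workflow paused

    def record(self, anomalous: bool) -> None:
        """Count consecutive anomalies; a healthy run resets the counter."""
        if self.is_open:
            return  # stays paused until an operator calls reset()
        self.anomalies = self.anomalies + 1 if anomalous else 0
        if self.anomalies >= self.max_anomalies:
            self.is_open = True

    def allow_run(self) -> bool:
        """Workflows should check this before each execution."""
        return not self.is_open

    def reset(self) -> None:
        """Manual re-arm after the incident has been reviewed."""
        self.anomalies = 0
        self.is_open = False
```

Requiring a manual reset keeps a compromised workflow quarantined even if the anomaly signal briefly goes quiet.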

4. Engage & Monitor

Goal: Detect deviations, poison attempts, and ghost-token persistence in real time.

Runtime Analytics

Track API call volumes, destinations, and rates per agent

Monitor vector DB query patterns for unusual spikes or drift (possible poisoning)

Log Correlation

Centralize logs from n8n, Make.com, Zapier, and all AI services

Implement regex-based secret-scrubbing on logs before storage

Alert on any response matching credential patterns or unexpected data flows
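
For regex-based scrubbing before storage, one option in Python is a `logging.Filter` that rewrites each record before handlers see it. The pattern below is illustrative only and will miss many credential formats; `SecretScrubber` is a name invented for this sketch.

```python
import logging
import re

# Illustrative pattern only; real deployments need patterns per credential type.
SECRET_RE = re.compile(r"(?i)\b(api[_-]?key|password|token)\b(\s*[=:]\s*)\S+")


class SecretScrubber(logging.Filter):
    """Redacts credential-looking values from a record before handlers see it."""

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()  # merge msg and args first
        record.msg = SECRET_RE.sub(r"\1\2[REDACTED]", message)
        record.args = ()               # args are already merged into msg
        return True                    # keep the (scrubbed) record
```

Attaching it with `handler.addFilter(SecretScrubber())` applies the scrub to everything that handler writes, whichever logger the record came from.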

5. Evolve & Educate

Goal: Build organizational resilience through continuous learning.

Red-Team Exercises

A2A impersonation drills (fake MCP endpoints)

RAG leakage table-tops (simulate asking for a private key)

Training & Governance

Workshops on sanitization best practices in n8n/Make.com

Quarterly policy reviews for AI tool usage and data classification

AI SAFE² Integrated AI Security Checklist for Autonomous Agents

| Category | Controls & Actions |
|---|---|
| Data Sanitization | • Round coordinates, mask or remove IDs |
| | • Delete unnecessary fields before ingestion |
| | • Enforce JSON-schema validation on all inputs |
| Sanitization During Dev | • Use minimal training data; encrypt and tokenize sensitive fields |
| | • Use n8n/Make.com external-secrets or encrypted storage modules |
| | • Regex-based scrubbing on logs and outputs |
| Model Security | • Adversarial testing and backdoor detection |
| | • Regularly check for poisoned data in training sets |
| | • Limit query rates; deploy explainability tools for anomaly detection |
| Secret Management | • Centralize in Vault (HashiCorp, AWS Secrets Manager) |
| | • Rotate keys < 1 hr; forbid hard-coding in scripts |
| | • Assign unique identities and scopes per automation |
| Least Privilege & Scope | • JIT role assumption (AWS STS, GCP short-lived creds) |
| | • Scoped OAuth tokens per integration (e.g. Slack channel only) |
| Monitoring & Alerts | • Behavioral analytics on API calls, volume, destinations |
| | • Vector DB drift detection |
| | • Real-time alerts on secret-pattern matches in logs |
| Fail-Safe Mechanisms | • Automated “kill switches” in orchestration (Zapier, Make.com) |
| | • Credential-rotation bots triggered by anomalies |
| | • Sandbox rollback processes |
| Incident Response | • Runbooks for token revocation, DB quarantine, and workflow pause |
| | • Defined roles and quarterly drills |
| Compliance & Governance | • Enforce GDPR, HIPAA, CCPA compliance via data classification |
| | • AI tool usage policy; block public AI for regulated data |
| Third-Party Management | • Security vetting of plugins and connectors |
| | • Controlled access and monitoring of third-party endpoints |
| User Education | • Phishing and strong-password training for non-tech users |
| | • Developer workshops on sanitization and scoped credentials |
| Continuous Improvement | • Quarterly security audits and red-teaming |
| | • Update checklist based on new A2A/RAG threats |
| | • Participate in cybersecurity forums for emerging best practices |

User Checklist – AI Security for Automation Development: The AI Agent Framework

Use this checklist for every new agent, workflow, or AI integration.

Design & Data Classification

Map end-to-end data flows; classify each element (public, internal, sensitive).

Identify all service accounts, vector DBs, RAG endpoints, and webhook URLs.

Sanitization & Input/Output Controls

Enforce JSON/schema validation on all inbound webhooks; reject unexpected fields.

Apply regex-based scrubbers on outputs/logs to mask credential patterns.

Reduce data granularity; remove unnecessary metadata/identifiers.

Secret Management & Scoping

Store all secrets in a centralized vault; retrieve at runtime using ephemeral tokens.

Assign minimal scopes per agent (e.g., “post-only,” “read-only,” “channel-specific”).

Enforce MFA/2FA on all related human and service accounts.

Fail-Safe Mechanisms

Implement automated credential-rotation scripts triggered by anomaly alerts.

Build circuit breakers to pause or rollback workflows on threshold breaches.

Maintain incident response playbooks for leaks, poisoning, and unauthorized access.

Engagement & Monitoring

Instrument real-time telemetry on API usage, errors, and deviation from normal patterns.

Monitor vector-DB access logs for unusual reads/writes; enable alerting on suspicious queries.

Audit cross-agent calls to detect A2A impersonation or unauthorized chaining.

Validation & Red-Teaming

Run RAG leakage tabletop exercises (simulate a prompt that asks for hidden keys).

Test A2A spoofing (craft fake agent endpoints and confirm real agents reject them).

Pen-test orchestration chains for ghost-token persistence scenarios.
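
For the A2A spoofing test, one common way for a real agent to reject a fake peer is to require a message signature. The sketch below uses HMAC-SHA256 with a shared key; it assumes the key has already been distributed securely, and it is one possible mechanism rather than anything the framework mandates.

```python
import hashlib
import hmac


def sign_message(shared_key: bytes, body: bytes) -> str:
    """Compute an HMAC-SHA256 tag the receiving agent can verify."""
    return hmac.new(shared_key, body, hashlib.sha256).hexdigest()


def verify_message(shared_key: bytes, body: bytes, signature: str) -> bool:
    """Reject any message whose tag does not match; compare in constant time."""
    expected = sign_message(shared_key, body)
    return hmac.compare_digest(expected, signature)
```

In a drill, the fake endpoint would not hold the shared key, so its messages should fail `verify_message`. A fuller design would also bind a timestamp or nonce into the signed body to resist replay.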

Governance & Education

Train non-technical users (marketers, growth hackers) on AI SAFE² principles and sanitization practices.

Enforce policy against uploading regulated or classified data into public AI tools.

Schedule quarterly reviews of all AI automations, risk posture, and checklist updates.

Conclusion – Agentic AI Framework

AI SAFE² transforms a static set of controls into a dynamic, five-pillar strategy that starts at design (Sanitize & Isolate), ensures full visibility (Audit & Inventory), automates recovery (Fail-Safe & Recovery), embeds real-time oversight (Engage & Monitor), and drives continuous learning (Evolve & Educate). By integrating sanitization best practices, JIT credentials, behavioral monitoring, and developer-focused checklists, the Cyber Strategy Institute's (CSI) framework keeps your AI automations fast, flexible, and, most importantly, secure.

AI Automation & Agent Security FAQs

Q1: What is AI security?

AI security is the discipline of protecting AI-powered workflows and their underlying data—from threats like credential leakage, prompt-injection, and model poisoning—while leveraging AI techniques to bolster overall defenses.

Data hygiene: Enforce input/output sanitization to mask or strip secrets before they enter any agent context.

Behavioral monitoring: Deploy real-time analytics to spot anomalous API call spikes or ghost-token persistence.

Automated resilience: Embed “circuit breakers” and rotation bots to quarantine compromised workflows instantly.

Relevant Tools: JSON-schema validators, SIEM/SOAR platforms, HashiCorp Vault

Q2: Should we wait for AI to mature before adopting it?

No—delaying AI adoption only widens the competitive gap; instead, deploy with a risk-based rollout that pairs phased enablement with built-in security controls.

Start small: Pilot one or two automations in an isolated dev/test environment.

Enforce basics: Apply immediate sanitization and scoped credentials before scaling.

Iterate with feedback: Use continuous red-teaming and telemetry to refine protections.

Relevant Frameworks: NIST AI Risk Management Framework (AI RMF), NIST Cybersecurity Framework (CSF)

Q3: What resources help beginners start their AI security journey?

Beginners should leverage established security frameworks (NIST CSF, NIST AI RMF), open-source tooling for secrets scanning, and concise threat-modeling guides tailored to AI workflows.

Frameworks: Use NIST CSF and the NIST AI Risk Management Framework as your security blueprint.

Tooling: Enable GitHub Secret Scanning, Dependabot, and JSON schema validators from day one.

Guides: Follow Cyber Strategy Institute’s AI SAFE² introduction and threat-modeling checklists.

Relevant Tools: GitHub Advanced Security, Gitleaks, MAESTRO/SAIF templates

Q4: How do you adopt AI and mitigate associated risks?

Adopt AI by integrating security at each stage—sanitize all inputs, enforce least-privilege credentials, continuously monitor behavior, and automate recovery when issues arise.

Sanitization: Apply JSON-schema enforcement and redact any PII or secret patterns in logs/prompts.

Scoped Access: Issue short-lived, purpose-limited tokens instead of broad, long-lived keys.

Monitoring & Recovery: Instrument runtime analytics and configure automated circuit breakers.

Relevant Components: AI SAFE² pillars Sanitize & Isolate, Fail-Safe & Recovery (which absorbs Scope & Restrict), Engage & Monitor

Q5: Who is responsible when AI produces wrong or harmful outputs?

Accountability spans AI developers (for secure prompt engineering and sanitization), operations teams (for monitoring and incident response), and leadership (for governance and policy enforcement).

Developers: Ensure prompts are scrubbed of secrets and malicious payloads before use.

Operators: Watch telemetry and enact automated playbooks on anomalies.

Leadership: Define clear AI governance policies, roles, and training programs.

Relevant Roles: AI engineers, DevOps/SecOps, CISO/Compliance

Q6: What’s the risk of using AI-generated data that may infringe copyrights or patents?

AI can inadvertently reproduce copyrighted or patented material, exposing organizations to legal liability if outputs aren’t vetted or sanitized prior to use.

Human Review: Always have a compliance check before deploying AI content publicly.

Dual-Model Validation: Cross-reference outputs against a licensed corpus to detect infringements.

Plagiarism Tools: Integrate detection services to flag any near-duplicate or unlicensed passages.

Relevant Tools: Copyscape, Turnitin, in-house similarity scanners

Q7: How can I enable generative AI without saying “no” to my teams?

Adopt a “yes, but” approach: allow pilots in isolated environments but require documented risk assessments, time-boxed trials, and mandatory sanitization procedures.

Pilot Spaces: Create dev/test AI sandboxes with no production data access.

Risk Docs: Require simple risk checklists before any new AI workflow goes live.

Time-Boxed Trials: Limit pilot durations and review security metrics before expanding.

Relevant Practices: AI SAFE² Engage & Monitor, Evolve & Educate

Q8: How can I ensure prompts are sanitized before LLM processing?

Prompt sanitization uses automated scrubbers and schema-driven filters to strip secrets, PII, and malicious payloads before they reach the LLM.

Regex Scrubbing: Apply patterns like /api[_-]?key/i to redact keys in real time.

Schema Enforcement: Reject or sanitize any input fields not defined in your JSON schema.

Post-Entry Monitoring: Log raw prompts in a secure store, scan for violations, and alert on matches.

Relevant Tools: JSON-schema validators, custom scrub-scripts, centralized logging
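
A prompt scrubber along these lines could be sketched as follows. The first pattern extends the api[_-]?key example from this answer; the email and AWS access key ID patterns, the pattern names, and the placeholder format are assumptions added for illustration.

```python
import re

# Illustrative patterns; a production list would be longer and regularly reviewed.
PATTERNS = {
    "api_key": re.compile(r"(?i)api[_-]?key\s*[=:]\s*\S+"),
    "email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
}


def scrub_prompt(prompt: str) -> tuple[str, list[str]]:
    """Return the redacted prompt and the names of the patterns that matched."""
    hits = []
    for name, pattern in PATTERNS.items():
        prompt, count = pattern.subn(f"[{name.upper()}_REDACTED]", prompt)
        if count:
            hits.append(name)
    return prompt, hits
```

Returning the list of matched pattern names lets the caller raise an alert without ever logging the secret itself.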

Q9: What questions should I ask when assessing an AI vendor?

Key vendor assessment questions cover data residency, security architecture, incident response, and their built-in controls for agent and secret management.

Data Handling: Where is customer data stored and how is it encrypted at rest/in transit?

Security Controls: Do they provide built-in sanitization, scoped tokens, and real-time monitoring?

Incident Response: What are their breach notification SLAs and playbooks for agent compromise?

Relevant Standards: ISO 27001, SOC 2, vendor security white papers

Q10: Can AI be used for security automation, and what are the impacts?

Yes—AI-driven security automation accelerates threat detection, orchestrates rapid response actions, and reduces analyst fatigue by filtering false positives.

Threat Detection: Use AI to analyze log patterns and flag anomalies faster than rule-based systems.

Automated Response: Combine with SOAR playbooks to auto-isolate compromised assets or rotate keys.

Noise Reduction: Leverage ML to correlate events and suppress low-value alerts for human review.

Relevant Integrations: AI-powered EDR/XDR, SIEM platforms with ML modules

Q11: What is shadow AI (or shadow IT) and how do I manage and secure AI Agents?

Shadow AI refers to unsanctioned AI tools or workflows accessing corporate data outside official controls, which must be managed through discovery, policy enforcement, and safe alternatives.

Discovery: Scan network logs and SaaS apps for unapproved AI API traffic.

Policy & Enforcement: Define approved tool lists and block others at your network or proxy.

Safe Alternatives: Provide vetted, secure AI sandboxes so teams aren’t tempted to go rogue.

Relevant Practices: DLP rules, network proxy filtering, sanctioned AI catalogs
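
The discovery step can start as a simple pass over egress or proxy logs. This sketch assumes a simplified log format of "<user> <destination-host>" per line; the approved-host list, the watchlist, and the gateway hostname are hypothetical and would come from your own policy.

```python
from collections import Counter

# Hypothetical allowlist: AI endpoints the organization has sanctioned.
APPROVED_AI_HOSTS = {"ai-gateway.internal.example.com"}
# Hypothetical watchlist of AI API hosts to look for in egress logs.
KNOWN_AI_HOSTS = {"api.openai.com", "api.anthropic.com", "ai-gateway.internal.example.com"}


def find_shadow_ai(log_lines: list[str]) -> Counter:
    """Count requests per (user, host) to AI hosts that are not on the approved list.

    Assumes each log line looks like "<user> <destination-host>".
    """
    hits: Counter = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) != 2:
            continue  # skip malformed lines
        user, host = parts
        if host in KNOWN_AI_HOSTS and host not in APPROVED_AI_HOSTS:
            hits[(user, host)] += 1
    return hits
```

The counts are a starting point for a conversation with the team involved, and for deciding which sanctioned alternative to offer.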

Q12: What are the most popular AI agent frameworks for building autonomous agents?

The leading AI agent frameworks provide orchestration, tool integrations, and lifecycle management to simplify the creation of autonomous agents.

LangChain & LlamaIndex: Open-source frameworks for chaining LLM calls, plugging in vector DBs, and crafting complex agentic workflows.

AgenticFlow & Curser: No-code/low-code platforms tailored for marketers, offering prebuilt connectors (Slack, CRM, email) and A2A orchestration.

n8n & Make.com: Extendable automation frameworks that support custom “Function” or “Code” steps for AI model calls, enabling hybrid human–agent workflows.

Relevant Frameworks: LangChain, AgenticFlow, n8n

Q13: How do multiple AI agents interact in complex workflows without causing conflicts?

Well-designed agentic frameworks enforce scoped contexts, naming conventions, and handshake protocols to coordinate multiple agents seamlessly.

Scoped Contexts: Assign each agent its own data silo or namespace to prevent unexpected data bleed between agents.

Handshake Protocols: Define clear request/response schemas (JSON-schema) so one agent’s output always matches another’s input contract.

Orchestration Engine: Use a central orchestrator (e.g., Make.com or AgenticFlow) that enforces sequence, retries, and error handling across all participating agents.

Relevant Components: JSON-schema validators, orchestrators like Make.com, namespace isolation

Q14: How can I deploy AI agents without compromising security or exposing secrets?

Secure deployment of AI agents relies on ephemeral, least-privilege credentials and automated secret-management pipelines.

Ephemeral Tokens: Use short-lived IAM roles (AWS STS, Azure AD) or scoped OAuth tokens that expire automatically.

Secrets Vaulting: Retrieve API keys at runtime from a centralized vault (HashiCorp Vault, AWS Secrets Manager) rather than embedding them.

Circuit Breakers: Incorporate Fail-Safe playbooks that automatically rotate or revoke credentials if anomalous behavior is detected.

Relevant Tools: HashiCorp Vault, AWS STS, AI SAFE² Fail-Safe & Recovery

Q15: Which open-source frameworks are best for building and managing AI agents?

Open-source frameworks combine flexibility, community support, and the ability to self-host for full control over data and model deployments.

LangChain: Provides a modular agent framework with connectors for vector DBs, chat models, and tool use.

AutoGen & AgentOS: Focus on multi-agent orchestration with built-in conflict resolution and memory plugins.

n8n (self-hosted): Extendable via custom nodes, which suits teams that need to build business automations with AI steps on infrastructure they control.

Relevant Frameworks: LangChain, AutoGen, n8n

Q16: How do I monitor and tune agent behavior and performance in production AI systems?

Continuous telemetry, anomaly detection, and periodic red-teaming are key to ensuring your AI agents operate reliably and securely.

Runtime Analytics: Instrument every API call’s volume, latency, and success/failure rates; alert on deviations from baseline patterns.

Drift Monitoring: Track vector DB query distributions and model output similarities to detect poisoning or performance degradation.

A/B Testing & Red-Teaming: Regularly run controlled experiments and adversarial drills (A2A impersonation, RAG leak tests) to surface hidden weaknesses.

Relevant Tools: SIEM/SOAR platforms, ML drift detectors, AI SAFE² Engage & Monitor
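
Drift monitoring can be approximated by comparing the centroid of recent query or document embeddings with a stored baseline. The sketch below is one simple heuristic under stated assumptions: embeddings are plain lists of floats, centroids are non-zero, and the 0.2 threshold is an arbitrary illustrative value that would need tuning.

```python
import math


def centroid(vectors: list[list[float]]) -> list[float]:
    """Component-wise mean of equal-length vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def cosine_distance(a: list[float], b: list[float]) -> float:
    """1 - cosine similarity; 0 means same direction, 1 means orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm  # assumes neither vector is all zeros


def has_drifted(baseline: list[list[float]], recent: list[list[float]],
                threshold: float = 0.2) -> bool:
    """Flag drift when the recent centroid moves away from the baseline centroid."""
    return cosine_distance(centroid(baseline), centroid(recent)) > threshold
```

A centroid shift will not catch every poisoning attempt, since a few malicious vectors barely move the mean, so it works best alongside write-audit logs and per-source checks.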

Q17: What core security policies should govern my AI agent deployments for compliance and risk reduction?

Effective AI governance combines access controls, data classification, and incident-response requirements tailored to autonomous agents.

Data Classification & Sanitization: Mandate PII/credential masking, minimal data ingestion, and schema-validated inputs for all agents.

Access & Privilege Policies: Enforce least-privilege scopes, short-lived credentials, and periodic access reviews for each agent’s service account.

Incident Response & Audit: Require runbooks for ghost-token scenarios, RAG poisoning, and credential leaks, plus immutable audit logs for every agent action.

Relevant Policies: AI SAFE² Sanitize & Isolate, Fail-Safe & Recovery (including its Scope & Restrict controls)